Would you trust a machine to perform procedures?

Purpose of Review

To assess the state-of-the-art in research on trust in robots and to examine whether recent methodological advances can aid in the development of trustworthy robots.

Recent Findings

While traditional work in trustworthy robotics has focused on studying the antecedents and consequences of trust in robots, recent work has gravitated towards the development of strategies for robots to actively gain, calibrate, and maintain the human user’s trust. Among these works, there is emphasis on endowing robotic agents with reasoning capabilities (e.g., via probabilistic modeling).

Summary

The state-of-the-art in trust research provides roboticists with a large trove of tools to develop trustworthy robots. However, challenges remain when it comes to trust in real-world human-robot interaction (HRI) settings: there exist outstanding issues in trust measurement, guarantees on robot behavior (e.g., with respect to user privacy), and handling rich multidimensional data. We examine how recent advances in psychometrics, trustworthy systems, robot ethics, and deep learning can help resolve each of these issues. We believe that these methodological advances could pave the way for the creation of truly autonomous, trustworthy social robots.

Introduction

On July 2, 1994, USAir Flight 1016 was scheduled to land at Douglas International Airport in Charlotte, NC. Upon nearing the airport, the plane experienced inclement weather and was affected by wind shear (a sudden change in wind velocity that can destabilize an aircraft). On the ground, a wind shear alert system installed at the airport issued a total of three warnings to the air traffic controller. But due to a lack of trust in the alert system, the air traffic controller transmitted only one of the alarms, which, unfortunately, was never received by the plane. Unaware of the presence of wind shear, the aircrew failed to react appropriately and the plane crashed, killing 37 people [1] (see Fig. 1). This tragedy vividly brings into focus the critical role of trust in automation (and by extension, robots): a lack of trust can lead to disuse, with potentially dire consequences. Had the air traffic controller trusted the alert system and transmitted all three warnings, the tragedy might have been averted.

Human-robot trust is crucial in today’s world, where modern social robots are increasingly being deployed. In healthcare, robots are used for patient rehabilitation [2] and to provide frontline assistance during the ongoing COVID-19 pandemic [3, 4]. Within education, embodied social robots are being used as tutors to aid learning among children [5]. The unfortunate incident of USAir Flight 1016 highlights the problem of undertrust, but maximizing a user’s trust in a robot may not necessarily lead to positive interaction outcomes. Overtrust [6, 7] is also highly undesirable, especially in the healthcare and educational settings above. For instance, [6] demonstrated that people were willing to let an unknown robot enter restricted premises (e.g., by holding the door for it), thus raising concerns about security, privacy, and safety. Perhaps even more dramatically, a study by [7] showed that people willingly ignored the emergency exit sign to follow an evacuation robot taking a wrong turn during a (simulated but realistic) fire emergency, even though the robot had performed inefficiently prior to the start of the emergency. These examples drive home a key message: miscalibrated trust can lead to misuse of robots.

A Question of Trust

To obtain a meaningful answer to our central question, we need to ascertain exactly what is meant by “trust in a robot.” Is the notion of trust in automated systems (e.g., a wind-shear alert system) equivalent to trust in a social robot? How is trust different from concepts such as trustworthiness and reputation?

Historically, trust has been studied with respect to automated systems [11, 12, 14]. Since then, much effort has been expended to extend the study of trust to human-robot interaction (HRI) (see Fig. 2 for an overview). We use the term “robots” to refer to embodied agents with a physical manifestation that are meant to operate in noisy, dynamic environments [8]. An automated system, on the other hand, may be a computerized process without an explicit physical form. While research on trust in automation can inform our understanding of trust in robots [11], this robot-automation distinction (which is, admittedly, not quite sharp) has important implications for the way we conceptualize trust. For one, the physical embodiment of a robot makes its design a key consideration in the formation of trust. Furthermore, we envision that social robots will typically be deployed in dynamic, unstructured environments and will have to work alongside human agents. This suggests that the ability to navigate uncertainty and social contexts plays a greater role in the formation and maintenance of human trust.

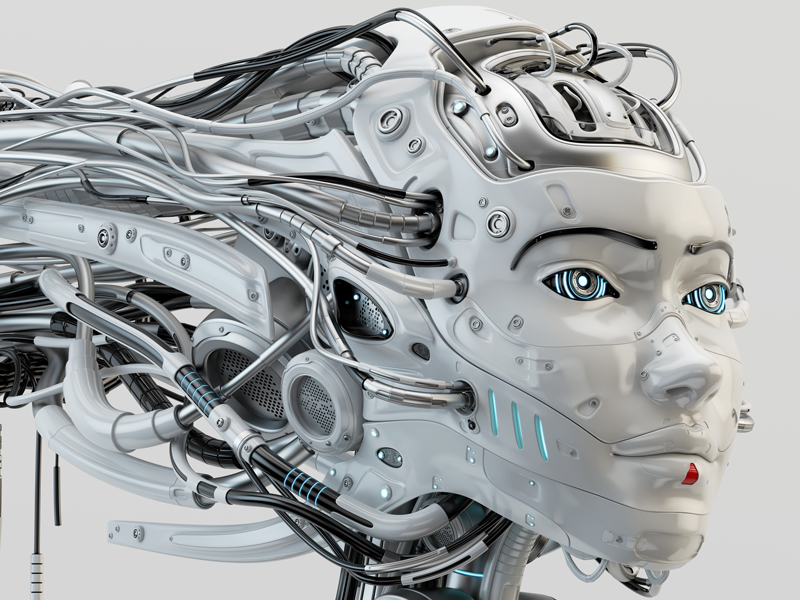

Start with Design

A first step to establish human-robot trust is to design the robot in a way that biases people to trust it appropriately. Conventionally, one way to do so is by configuring the robot’s physical appearance [11]. With humans, our decision to trust a person often hinges upon first impressions [30]. The same goes for robots: people often judge a robot’s trustworthiness based on its physical appearance [11, 31, 32, 33]. For instance, robots that have human-like characteristics tend to be viewed as more trustworthy [31], but only up to a certain point. Robots that appear highly similar to, but still quite distinguishable from, humans are seen as less trustworthy [32, 33]. This dip in perceived trustworthiness then recovers for robots that appear indistinguishable from humans. This phenomenon, called the “uncanny valley” [8, 32, 33] because of the U-shaped function relating perceived trustworthiness to robot anthropomorphism, has important implications for the design of social robots.